
Tina Nabatchi’s 2012 report, published by the IBM Center for The Business of Government, offers government program managers a practical guide for assessing whether their citizen participation efforts are making a difference. The report lays out evaluation steps both for the implementation and management of citizen participation initiatives and for assessing the impact of a particular initiative. An appendix also provides helpful worksheets.

In coming years, agencies will face greater fiscal pressures as well as increased citizen demands for greater participation in designing and overseeing their policies and programs. Understanding how to engage citizens most effectively in their government will therefore likely grow in importance. Nabatchi hopes this evaluation guide will give government managers at all levels a useful framework for determining the value of their citizen participation initiatives.


At the 2008 National Conference on Dialogue & Deliberation, we focused on 5 challenges identified by participants at our past conferences as being vitally important for our field to address. This is one in a series of five posts featuring the final reports from our “challenge leaders.”

Systems Challenge: Making dialogue and deliberation integral to our systems

Most civic experiments in the last decade have been temporary organizing efforts that don’t lead to structured, long-term changes in the way citizens and the system interact. How can we make D&D values and practices integral to government, schools, organizations, etc., so that our methods of involving people, solving problems, and making decisions happen more predictably and naturally?

Challenge Leaders: Will Friedman, Chief Operating Officer of Public Agenda; Matt Leighninger, Executive Director of the Deliberative Democracy Consortium

Report on the Systems Challenge: Although no formal report was submitted, Will and Matt identified the following as common themes that emerged in this challenge area:


Evaluation Challenge: Demonstrating that dialogue and deliberation works

How can we demonstrate to power-holders (public officials, funders, CEOs, etc.) that D&D really works? Evaluation and measurement are a perennial focus of human performance/change interventions. What evaluation tools and related research do we need to develop?

Challenge Leaders: John Gastil, Communications Professor at the University of Washington; Janette Hartz-Karp, Professor at the Curtin University Sustainability Policy (CUSP) Institute

The most telling reflection of where the field of deliberative democracy stands in relation to evaluation is that, despite this being a specific ‘challenge’ area, only one session at the NCDD Conference was aimed specifically at evaluation: ‘Evaluating Dialogue and Deliberation: What are we Learning?’ by Miriam Wyman, Jacquie Dale and Natasha Manji. This dearth of evaluation sessions among the NCDD Conference offerings is all the more surprising because, as learners, practitioners, public and elected officials, and researchers, we all grapple with this issue with monotonous regularity, knowing that it is pivotal to our practice. Suffice it to say, this challenge is so daunting that few choose to face it head-on.

Wyman et al. made this observation when they quoted the cautionary words of the OECD (from a 2006 report): “There is a striking imbalance between time, money and energy that governments in OECD countries invest in engaging citizens and civil society in public decision-making and the amount of attention they pay to evaluating the effectiveness and impact of such efforts.”

The conversations during the Conference appeared to fall into two main streams: the varied reasons people have for doing evaluations, and the diverse approaches to evaluation.

A. Reasons for Evaluating

The first conversation stream was one of convergence or, more accurately, of several streams proceeding quietly in tandem. This conversation eddied around the reasons different practitioners have for conducting evaluations. These included:

“External” reasons oriented toward outside perceptions:

“Internal” reasons more focused on making the process work or the practitioner’s drive for self-critique:

B. How to Evaluate

The second conversation stream at the Conference, on how we should evaluate, was more divergent, reflecting some of the divides in values and practices among participants. On the one hand, a loud and clear stream held that if we want to link citizens’ voices with governance and decision-making, we need to use measures that have credibility with policy- and decision-makers. Such measures would include instruments such as surveys, interviews, and cost-benefit analysis that apply quantitative, statistical methods and, to a lesser extent, qualitative analyses that can claim independence and research rigor. On the other hand, another stream questioned the assumptions underlying these more status quo instruments and their basis in linear thinking. This stream asked: are we measuring what matters when we use more conventional tools? For example, did the dialogue and deliberation result in:

From these questions, at least three perspectives emerged:

An ecumenical approach to evaluation may keep the peace in the NCDD community, but one of the challenges raised in the Wyman et al. session was the lack of standard indicators for comparability. What good are our evaluation tools if they differ so much from one context to another? How, then, could we compare the efficacy of different approaches to public involvement?

Final Reflections

Along with the lack of standard indicators, other barriers to evaluation also persist, as identified in the Wyman et al. session:

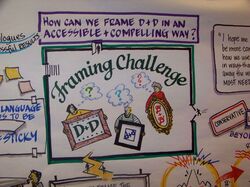

Wyman et al. opened their session with the seemingly obvious but often neglected proposition that evaluation plans need to be built into the design of processes. They demonstrated this with a Canadian preventive health care example concerning a potential influenza pandemic, in which there was a conscious decision to integrate evaluation from the outset. The process they outlined was as follows: any evaluation should start with early agreement on areas of inquiry. This should be followed by deciding the kinds of information that would support these areas of inquiry (the performance indicators), then the most suitable tools, and finally the questions to be asked given the context. A key learning from the pandemic initiative they examined was: “In a nutshell, start at the beginning and hang in until well after the end, if there even is an end (because the learning is ideally never ending).” For NCDD, we clearly need to find opportunities to share more D&D evaluation stories to increase our learning and, in so doing, to increase the strength and resilience of our dialogue and deliberation community.

Our leader for the “Framing Challenge” was Jacob Hess, then a Ph.D. candidate in Clinical-Community Psychology at the University of Illinois. Jacob wrote an in-depth report on what was discussed at the conference in this challenge area, along with his own reflections as a social conservative who is committed to dialogue. Download the 2008 Framing Challenge Report (Word doc).

Framing Challenge: Framing this work in an accessible way

How can we “frame” (write, talk about, and present) D&D in a more accessible and compelling way, so that people of all income levels, educational levels, and political perspectives are drawn to this work?
How can we better describe the features and benefits of D&D and equip our members to deliver that message effectively? Addressing this challenge may contribute greatly to other challenges.

Challenge Leader: Jacob Hess, then a Ph.D. candidate in Clinical-Community Psychology at the University of Illinois

Here is a taste of Jacob’s thoughtful report:

As a social conservative who has found a home in the dialogue community, I was invited to be “point person” for this challenge at the 2008 Austin Conference. The different ways we talk about, portray and frame dialogue can obviously make a major difference in whether diverse groups feel comfortable participating in D&D venues (including our coalition). Of course, conservatives are only one example of a group for whom this challenge matters; others who may struggle with our prevailing frameworks include young people, those without the privilege of education, minority ethnic communities, etc. As I learned myself, even progressive people may be “turned off” by a particular framing. After becoming involved in dialogue, I would share what I was learning with classmates and professors during our “diversity seminar.” When hearing about dialogue framed by their white, male, conservatively religious classmate, several of my classmates decided that dialogue must really be a conservative thing, i.e., an attempt to placate, muffle or distract from activism and thereby indirectly reinforce the status quo (a valid concern!). Ultimately, however, I believe that in each case these fears are less inherent to dialogue or deliberation itself than to a particular framing of it. Does dialogue inherently serve either a radical or a status quo agenda? Does it require someone to either believe or disbelieve in truth? Does it implicitly cater to one ethnic community over another, one age group over another, one gender over another? I think not. Having said this, little cues in our language and framing may inadvertently communicate otherwise. . .
After being identified by the NCDD community (alongside 4 other key challenges), this challenge was explored and elaborated in an online discussion among NCDD members; ultimately, it came to read: “Articulating the importance of this work to those beyond our immediate community (making D&D compelling to people of all income levels, educational levels, political perspectives, etc.), and helping equip members of the D&D community to talk about this work in an accessible and effective way.” This “challenge #2” is intended to draw our collective attention to how we can make dialogue and deliberation more accessible to more communities, not necessarily by radically altering the practice itself, but by making sure the packaging, framing and presentation don’t inadvertently scare them away. As reframed by Steven Fearing, the “core question” for this challenge becomes: “How can we frame (speak of) this work in a more accessible and compelling way, so that people of all income levels, educational levels, and political perspectives are drawn to D&D?”

Inclusion Challenge: Walking our talk in terms of bias and inclusion

What are the most critical issues of inclusion and bias right now in the D&D community, and how do we address them? What are the most critical issues related to bias, inclusion, and oppression in the world at large, and how can we most effectively address these issues through the use of dialogue and deliberation methods?

Challenge Leader: Leanne Nurse, Program Analyst for the U.S. Environmental Protection Agency


ABOUT NCDD

NCDD is a community and coalition of individuals and organizations who bring people together to discuss, decide and collaborate on today's toughest issues.

© The National Coalition For Dialogue And Deliberation, Inc. All rights reserved.
